I/O

Having read in the data, we're now going to make sure all our agents have access to it.

Shared data is always dangerous in programming. One of the reasons global variables are discouraged is that, when we access them, we have no idea whether some other bit of code is also accessing them. In general, having multiple pieces of code accessing one thing is not great. The exceptions are data and lists of objects that are shared on purpose. With the former, objects can deliberately interact with each other to achieve some task with a dataset (for example, processing different bits of it); with the latter, we can develop object-to-object communication if each object has a shared list of all the other objects.

We're going to do both in this model: agents are going to work together on the environment, nibbling it down to nothing, and we'll make sure every agent has access to a shared list of all the agents so they can transfer resources/information between themselves. We'll do the latter in the next practical; in this one we'll sort out giving every agent access to the environment.

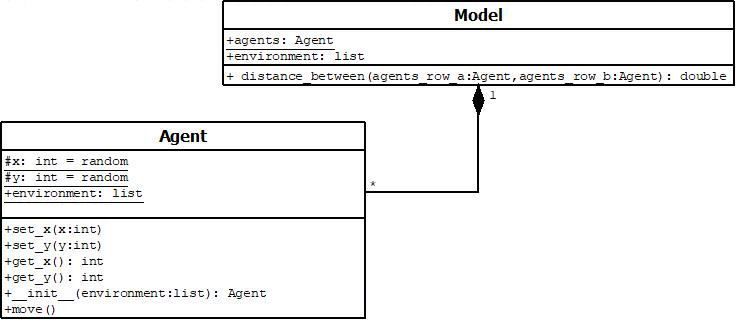

The safest way to share data and objects is to construct a special class for doing so, that controls access to the data. This is sometimes appropriate, for example, if you have a parallel model: that is, one where multiple computers are working on the same dataset. For here, however, that would be overkill. Instead, what we're going to do is pass the environment into each agent as we make it, and store the link to it as an instance variable. Here's the UML:

Make sure you make the environment list in the model.py file before you make the agents list, and that you read in the data before you start moving the agents. Then adjust the creation of the agents to this (or similar):

    agents.append(agentframework.Agent(environment))

You'll see we're passing the environment list into the Agent's constructor. Given this, we'll need to also adjust our Agent class to include this (we've not listed everything, just the relevant bits):

    def __init__(self, environment):
        self.environment = environment
        self.store = 0  # We'll come to this in a second.

And that's us with a link set up inside our Agents. The variable inside each Agent is a link to the object passed in, not a copy, so all the Agents link to the same environment object (we could have called it by another name, but it would still be a variable name linked to that object, because that's what we've passed in). Because that object is mutable (a list), the changes an Agent makes to the environment data are made in place, so as the Agents change the data, it changes for all agents. If environment were an immutable object, this wouldn't be the case: it couldn't be changed in place, so "changing" it would result in a new object being generated and the variable being linked to that instead (though Agents would be able to read the same data up until that point).
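The sharing described above is easy to check for yourself. The following is a minimal sketch, assuming a cut-down stand-in for the Agent class (not the full agentframework.Agent) and a tiny 2 by 2 environment made up purely for illustration:

```python
class Agent:
    """Cut-down stand-in for agentframework.Agent (illustration only)."""

    def __init__(self, environment):
        # Store a link to the list passed in; no copy is made.
        self.environment = environment


# Tiny made-up environment: a list of rows, each a list of values.
environment = [[100, 100], [100, 100]]

agent_a = Agent(environment)
agent_b = Agent(environment)

# Both agents hold the very same list object.
same_object = agent_a.environment is agent_b.environment

# A change made through one agent...
agent_a.environment[0][1] -= 10

# ...is visible through the other agent and through the original name.
seen_by_b = agent_b.environment[0][1]
seen_by_model = environment[0][1]

print(same_object, seen_by_b, seen_by_model)
```

If the constructor stored a copy instead (for example with copy.deepcopy), each agent would nibble away at its own private environment and the model would never see the changes.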

What all this means is we can add this method to our agents:

    def eat(self):  # Can you make it eat what is left?
        if self.environment[self.y][self.x] > 10:
            self.environment[self.y][self.x] -= 10
            self.store += 10

Next, call eat for each agent after you move it in model.py (this or similar):

    for j in range(num_of_iterations):
        for i in range(num_of_agents):
            agents[i].move()
            agents[i].eat()

We should now be able to display our environment data after this point, showing it nibbled away. Rather nicely, matplotlib will display two datasets at once for us, so we can adapt our final agent display to:

    matplotlib.pyplot.xlim(0, 99)
    matplotlib.pyplot.ylim(0, 99)
    matplotlib.pyplot.imshow(environment)
    for i in range(num_of_agents):
        matplotlib.pyplot.scatter(agents[i].x, agents[i].y)
    matplotlib.pyplot.show()

You'll note that matplotlib even adjusts the amount of the environment shown to match the area the agents are in, which is nice of it.

So, that's kind of cool. We've now got agents that interact with our environment. If you've got some time, have a think about implementing the following:

Using the lecture notes, can you write out the environment as a file at the end?

Can you make a second file that writes out the total amount stored by all the agents on a line? Can you get the model to append the data to the file, rather than clearing it each time it runs?
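As a starting point for the two file-writing questions, here is one possible sketch using the standard csv module and the "a" (append) file mode. The function names write_environment and append_total_store are made up for this example, and it assumes the environment is a list of rows and that each agent has a store attribute as set up above:

```python
import csv


def write_environment(path, environment):
    """Write the environment (a list of rows) to a CSV file, replacing old contents."""
    # newline="" is what the csv module documentation recommends for file objects.
    with open(path, "w", newline="") as f:
        writer = csv.writer(f)
        for row in environment:
            writer.writerow(row)


def append_total_store(path, agents):
    """Append the total stored by all agents as one new line; return the total."""
    total = sum(agent.store for agent in agents)
    # Mode "a" adds to the end of the file instead of clearing it.
    with open(path, "a") as f:
        f.write(str(total) + "\n")
    return total
```

At the end of model.py these might be called as write_environment("environment_out.csv", environment) and append_total_store("stores.txt", agents); both file names are only placeholders.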

Can you override __str__(self) in the agents, as mentioned in the lecture on classes, so that they display information about their location and stores when printed?
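If you get stuck, the following shows roughly what an override could look like. It is only a sketch on a cut-down stand-in class: passing x and y into the constructor is an assumption made to keep the example short, and the wording of the message is entirely up to you.

```python
class Agent:
    """Cut-down stand-in for agentframework.Agent (illustration only)."""

    def __init__(self, environment, x=0, y=0):
        # x and y are passed in here only to keep the sketch short;
        # your own class may well set them randomly.
        self.environment = environment
        self.x = x
        self.y = y
        self.store = 0

    def __str__(self):
        # Called by print() and str() to get a readable description.
        return f"Agent at x={self.x}, y={self.y} with store={self.store}"


agent = Agent([[0]], x=3, y=7)
description = str(agent)
print(description)  # Agent at x=3, y=7 with store=0
```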

Can you get the agents to wander around the full environment by finding out the size of environment inside the agents, and using the size when you randomize their starting locations and deal with the boundary conditions?
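One way of approaching this is to ask the environment for its own dimensions with len. The sketch below assumes the environment is a non-empty list of equal-length rows, that agents take one step in each direction per move, and that agents leaving one edge reappear at the opposite one; the width and height attribute names are invented for the example, and your own move method may differ.

```python
import random


class Agent:
    """Cut-down stand-in for agentframework.Agent (illustration only)."""

    def __init__(self, environment):
        self.environment = environment
        # Sizes are taken from the data itself rather than hard-coded.
        self.height = len(environment)     # number of rows (y direction)
        self.width = len(environment[0])   # number of columns (x direction)
        # randint includes both end points, hence the "- 1".
        self.x = random.randint(0, self.width - 1)
        self.y = random.randint(0, self.height - 1)

    def move(self):
        # One step in each direction, wrapping round at the edges.
        self.x = (self.x + random.choice([-1, 1])) % self.width
        self.y = (self.y + random.choice([-1, 1])) % self.height
```

The limits of 99 passed to xlim and ylim in the plotting code would need the same treatment if the environment isn't 100 by 100.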

At the moment, the agents only eat 10 units at a time. This will leave a few units in each area, even if intensely grazed. Can you get them to eat the last few bits, if there's less than 10 left, without leaving negative values?
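A possible approach, sketched here on a cut-down stand-in class, is to work out the size of the bite first and cap it at whatever is available. It assumes the environment values never start out negative, and passing x and y into the constructor is only a convenience for the example:

```python
class Agent:
    """Cut-down stand-in for agentframework.Agent (illustration only)."""

    def __init__(self, environment, x=0, y=0):
        self.environment = environment
        self.x = x
        self.y = y
        self.store = 0

    def eat(self):
        # Take up to 10 units, but never more than is actually there.
        available = self.environment[self.y][self.x]
        bite = min(10, available)
        self.environment[self.y][self.x] -= bite
        self.store += bite


environment = [[25]]
agent = Agent(environment)
for _ in range(4):
    agent.eat()  # bites of 10, 10, 5 and then 0

print(environment[0][0], agent.store)  # 0 25
```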

Can you get the agents to sick up their store in a location if they've been greedy guts and eaten more than 100 units? (Note that when you add to or subtract from the map, the colours will re-scale.)
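For that last question, one way it might look is sketched below on a cut-down stand-in class. The method name sick_up is invented for this example, and treating "eaten more than 100 units" as store > 100 is an assumption about what the question intends:

```python
class Agent:
    """Cut-down stand-in for agentframework.Agent (illustration only)."""

    def __init__(self, environment, x=0, y=0):
        self.environment = environment
        self.x = x
        self.y = y
        self.store = 0

    def sick_up(self):
        # If the agent is holding more than 100 units, put the whole
        # store back into the environment at its current location.
        if self.store > 100:
            self.environment[self.y][self.x] += self.store
            self.store = 0


environment = [[5]]
agent = Agent(environment)
agent.store = 120  # pretend the agent has been greedy
agent.sick_up()

print(environment[0][0], agent.store)  # 125 0
```

In model.py this could be called straight after eat in the main loop.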